Conan Mercer Site Reliability Engineering Leader

SQS FIFO - Why It Might Not Be What You Want It To Be

13 Dec 2024 - Conan Mercer

The Role of Queuing Systems in Software Projects

At some point in a software project, the need to solve a problem involving a queuing system is likely to arise. Upon closer examination, it often becomes clear that the queue must handle duplicate messages, and the system as a whole should not be constrained by the queue's throughput capacity.Types of Queues

In an AWS environment, Amazon SQS (Simple Queue Service) is a popular choice because it abstracts much of the complexity involved in handling and processing messages in a queue.As one of AWS's oldest services, SQS offers two types of queues: Standard and FIFO (First In, First Out).

According to AWS documentation, when using a Standard queue, both the publisher and subscriber must manage idempotency—the guarantee that performing the same operation multiple times produces the same result.

For FIFO queues, however, idempotency is less explicitly emphasized, which might lead to the assumption that it is unnecessary. In reality, FIFO queues only guarantee deduplication within a five-minute window, so additional measures must be taken to ensure idempotency beyond this period.

By design, FIFO queues process messages in strict order, which can introduce a bottleneck to the overall system. Standard queues, on the other hand, support significantly higher throughput. For instance, Standard queues can handle up to 120,000 in-flight messages per queue, whereas FIFO queues are limited to 20,000 in-flight messages. When high throughput is required, relying solely on a FIFO queue may lead to load shedding, where the system drops excess messages to manage capacity constraints.

Time Is Precious—and Expensive

Time spent in a queue is valuable. If a message sits in a FIFO queue only to be removed before processing due to a deadline constraint, that time is wasted. This inefficiency is compounded if the message is being processed when it expires, as compute resources are consumed without yielding a result.

To mitigate such inefficiencies, one approach involves estimating a message's likelihood of exiting the queue successfully, based on the queue's current load, before it is even added. While this technique can reduce wasted effort, it is inherently approximate and risks rejecting messages that could have been processed successfully. This strategy exemplifies load shedding, where the system deliberately drops lower-priority tasks to maintain performance.

Understanding FIFO Queues Through Simple Math

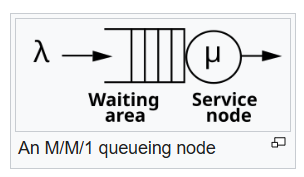

The behaviour of FIFO queues can be explained using basic mathematics. Consider a simple setup with a single worker handling tasks. New tasks arrive randomly at an average rate denoted by λ (lambda). Each task requires a certain amount of time to complete, with the average service time being 1/μ (mu). This system is known as an M/M/1 queue and is straightforward to analyse mathematically.

The ideal scenario occurs when λ≤μ. This means that the rate at which work enters the queue λ is less than or equal to the rate at which the system can process it (μ). In this case, the queue will not overflow, and the system remains stable.

However, when λ>μ, the amount of work entering the queue exceeds the system’s processing capacity on average. This results in the queue filling up indefinitely, which is certainly undesirable. Even when λ<μ , a buildup can still occur because λ and μ are averages. Temporary spikes in workload can cause λ to exceed μ for short periods, leading to transient congestion.

Under high traffic loads, FIFO queues can grow significantly, potentially causing messages to exceed their processing deadlines. If the system cannot wait long enough to process these messages, they may be removed from the queue without being acted upon.

In extreme cases, this could lead to nearly all messages being dropped without any actual work being performed.

Moreover, FIFO SQS queues are more expensive than Standard queues, so switching to Standard queues not only reduces costs but can also improve efficiency by avoiding some of the bottlenecks associated with FIFO processing.

I may go into more detail in the future, but for now, consider FIFO when you are considering FIFO.